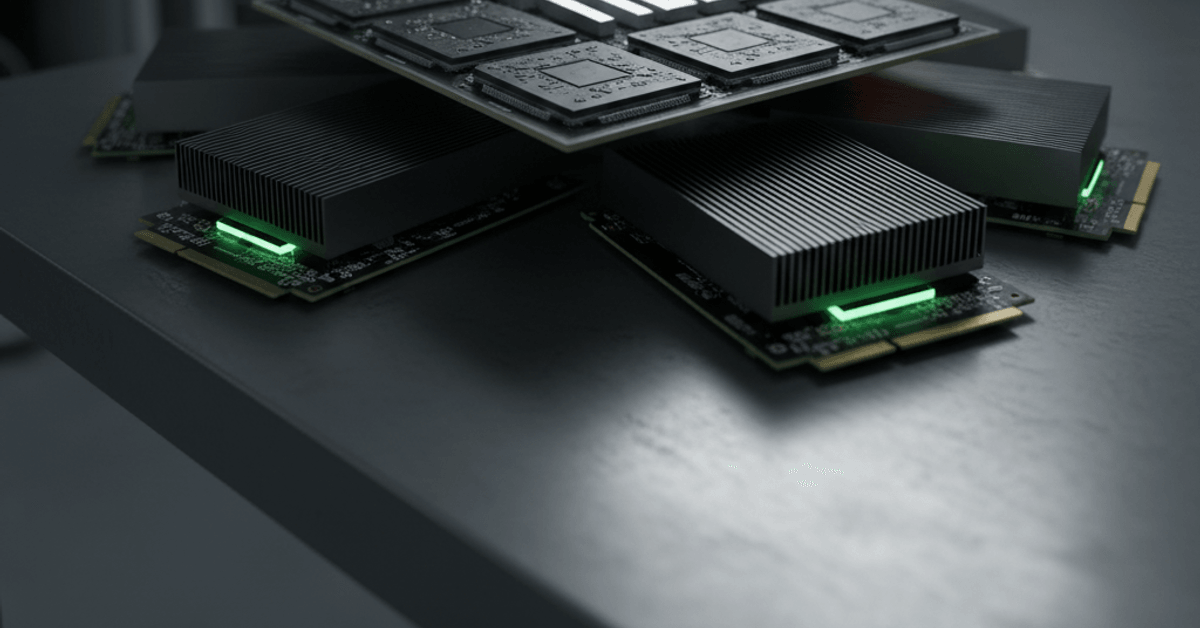

NVIDIA CUDA 13.1 launches Tile model, boosts speed

NVIDIA CUDA 13.1 launched with CUDA Tile and a broad set of developer upgrades, giving AI teams a significant productivity boost. The release expands performance tooling, resource control, and portability across GPUs. Other AI productivity shifts also emerged in web access, finance infrastructure, and health diagnostics.

NVIDIA CUDA 13.1 highlights

CUDA 13.1 introduces CUDA Tile, a tile-based […]